Recently, my team wanted to run their local ASP.NET project builds so that each person's IISExpress instance was reachable by the other team members. This takes some setup: a checkbox in the ASP.NET project properties window makes IISExpress host the project, but it does not make the site visible outside of the local machine.

To configure IISExpress to answer to host names other than localhost, the following steps are needed:

From an administrative command prompt, issue the following command:

    netsh http add urlacl url=http://mymachinename:50333/ user=everyone

“mymachinename” is the host name that you want IISExpress to respond to, and 50333 is the port that Visual Studio assigned to my project. Your port may be different; change this value to match the port that Visual Studio assigned to you.
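If several projects or machines need the same reservation, the command above can be generated rather than typed by hand. The following is a minimal Python sketch, not part of the original setup; the function name `urlacl_command` is hypothetical, and it only builds the argument list for the same `netsh http add urlacl` call shown above.

```python
from typing import List


def urlacl_command(host: str, port: int, user: str = "everyone") -> List[str]:
    """Build the argument list for the `netsh http add urlacl` call.

    `urlacl_command` is a hypothetical helper name. It mirrors the
    command from the post and does not run anything itself.
    """
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    # Keep the trailing slash, as in the command from the post.
    url = f"http://{host}:{port}/"
    return ["netsh", "http", "add", "urlacl", f"url={url}", f"user={user}"]


# Print the command line for the host and port used in this post.
print(" ".join(urlacl_command("mymachinename", 50333)))
```

To actually apply the reservation, the resulting list could be passed to `subprocess.run(..., check=True)` from an elevated prompt, since the command needs administrative rights.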

In the applicationhost.config file at %userprofile%\Documents\IISExpress\config\applicationhost.config, find the website entry for the application you are compiling and add a binding like the following:

    <binding protocol="http" bindingInformation=":50333:mymachinename" />

Finally, restart IISExpress to force the web server to re-load the settings you have just changed. This should allow the service to answer to the appropriate host.
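The binding edit above can also be scripted, which may help when a whole team has to make the same change. This is a rough Python sketch using only the standard library. The helper name `add_binding` and the site name `MyApp` are assumptions for illustration, and the code assumes the usual layout in which each `<site>` element holds a `<bindings>` child.

```python
import xml.etree.ElementTree as ET


def add_binding(config_xml: str, site_name: str, host: str, port: int) -> str:
    """Return config_xml with an extra http binding on the named site.

    `add_binding` is a hypothetical helper; it works on a string and
    leaves reading and writing the file to the caller.
    """
    root = ET.fromstring(config_xml)
    # Locate the <site> element whose name attribute matches.
    site = next((s for s in root.iter("site") if s.get("name") == site_name), None)
    if site is None:
        raise LookupError(f"no <site> named {site_name!r}")
    bindings = site.find("bindings")
    if bindings is None:
        bindings = ET.SubElement(site, "bindings")
    info = f":{port}:{host}"  # same format as the binding in the post
    # Only add the binding if an identical one is not already there.
    exists = any(
        b.get("protocol") == "http" and b.get("bindingInformation") == info
        for b in bindings.findall("binding")
    )
    if not exists:
        ET.SubElement(bindings, "binding",
                      {"protocol": "http", "bindingInformation": info})
    return ET.tostring(root, encoding="unicode")


# Small stand-in for the real file; "MyApp" is an assumed site name.
sample = (
    "<configuration><system.applicationHost><sites>"
    "<site name='MyApp' id='1'><bindings>"
    "<binding protocol='http' bindingInformation='*:50333:localhost' />"
    "</bindings></site>"
    "</sites></system.applicationHost></configuration>"
)
print(add_binding(sample, "MyApp", "mymachinename", 50333))
```

Note that `ElementTree` drops XML comments when parsing by default, and the real applicationhost.config contains many of them, so it is worth backing up the file before writing any generated output over it.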

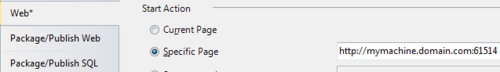

However, if you would like Visual Studio to launch and browse to “mymachinename” instead of localhost, you need to change one more setting in your web project’s properties. In the “Start Action” section, choose to start with a ‘Specific Page’ and enter the full machine name and port combination (for example, http://mymachinename:50333/) in the textbox to the right.

This preference is saved in the YOURWEBPROJECT.csproj.user file rather than in the .csproj file itself. As a result, the .csproj file can still be checked in to source control without affecting the other members of your team.